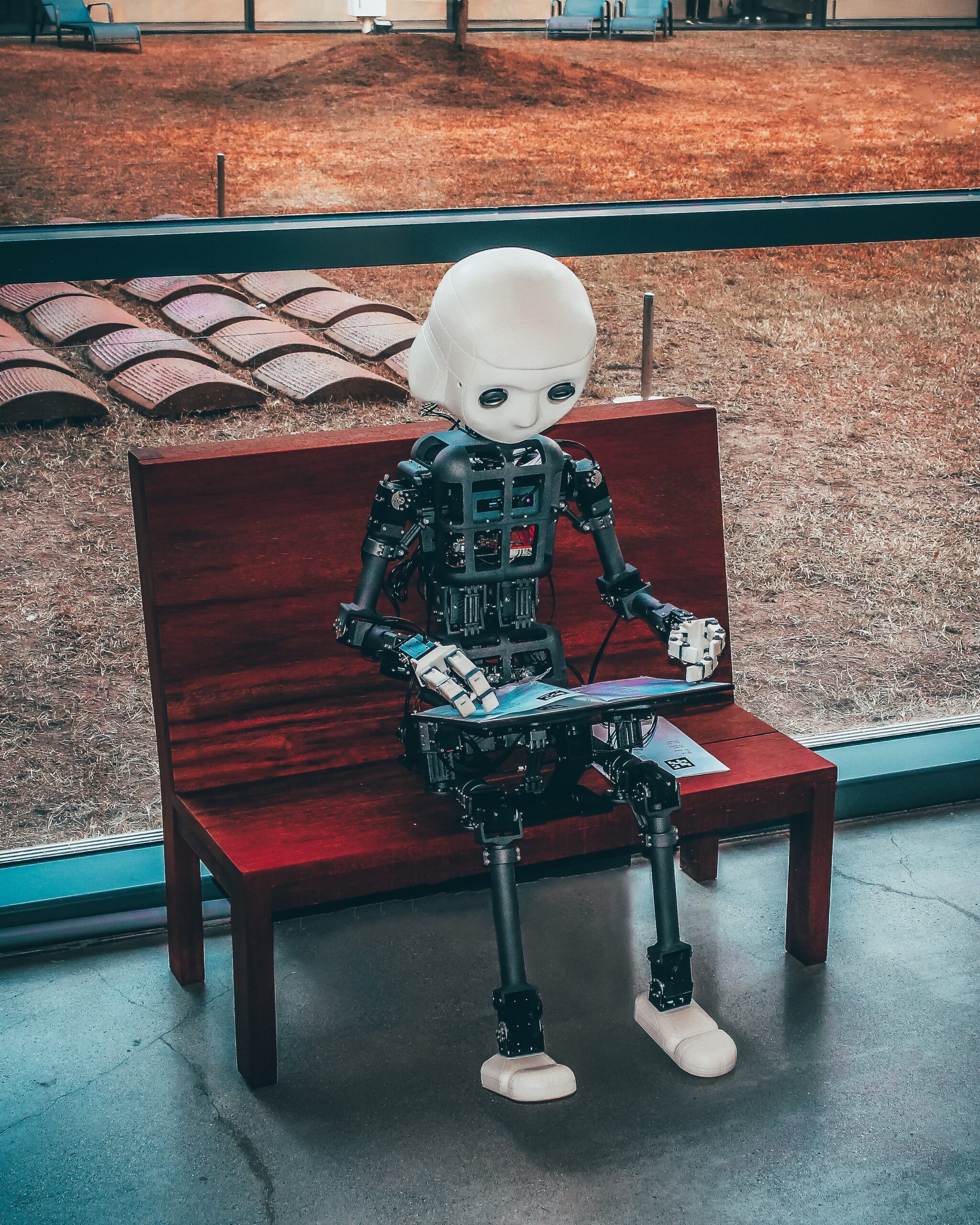

Artificial Intelligence

Photo by Andrea De Santis on Unsplash

Looking for a job? In addition to enduring those annoying, never-ending job interviews, you may find yourself face-to-face with an artificial intelligence bot.

Companies worldwide increasingly use artificial intelligence tools and analytics in employment decision-making – from parsing through resumes and screening candidates to automated assessments and digital interviews. But recent studies claim that AI does more harm than good.

While AI screening tools were developed to save companies time and money, they’ve been criticized for placing women and people of color at a disadvantage. The problem is that many companies lack appreciable diversity in their data set, making it impossible for an algorithm to know how people from underrepresented groups have performed in the past. As a result, the algorithm will be biased toward the data available and compare future candidates to that archetype.

New York City’s Automated Employment Decision Tools (AEDT) law is designed to offset the potential misuse of AI and protect job candidates against discrimination. Enforcement began on July 5, 2023, and other cities and states are expected to gradually follow suit. Employers must now inform applicants when and how they encounter AI. Furthermore, companies have to commission a third-party audit of the AI software they use and publish a summary of the results to show that their systems aren’t racist or sexist. Job applicants can request information about what data the AI collects and analyzes. Violations of the law can result in fines of up to $1,500.
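To make the audit idea concrete, here is a minimal sketch of one common fairness check: comparing how often candidates from each group are selected, then dividing each group's selection rate by the highest rate to get an "impact ratio". The function name `impact_ratios`, the group labels, and the numbers are illustrative assumptions, not taken from the law or from any real audit, and an actual audit would follow whatever methodology the auditor and regulator specify.

```python
from collections import Counter


def impact_ratios(records):
    """Return {group: selection rate / highest group selection rate}.

    `records` is an iterable of (group, was_selected) pairs.
    This is a hypothetical helper for illustration only.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    # Selection rate: share of each group's candidates that the tool advanced.
    rates = {group: selected[group] / totals[group] for group in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; ratios are undefined")

    # Impact ratio: each group's rate relative to the most-selected group.
    return {group: rate / best for group, rate in rates.items()}


# Made-up example data: 40 of 100 selected in group A, 20 of 100 in group B.
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)
ratios = impact_ratios(records)
print(ratios)
```

In this made-up example, group B is selected half as often as group A, so its ratio is well below 1. A gap like that is the kind of signal an audit summary would be expected to surface.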

Replacing Human Hiring Decisions

However, should a job applicant want to opt out of such an impersonal assessment by a bot, the new law’s scope is quite limited.

While the law specifies that instructions for requesting an alternative selection process must be included in the AI screening disclosure, companies aren’t actually required to use other screening methods. The law also applies only to AI used in hiring and promotion decisions, not to other employment decisions. Nor would it apply if the AI, for example, flags candidates with relevant experience but a human then reviews all applications and makes the ultimate hiring decision.

Some civil rights advocates and public interest groups argue that the law isn’t extensive enough and that it’s even unenforceable. On the other hand, businesses say that it’s impractical, costly, and burdensome, and that independent audits aren’t feasible.

Responsible Use of AI in Hiring

Although this law may be a good first attempt to put more regulatory guardrails around AI, it remains to be seen whether it will ensure the responsible use of AI in hiring processes. At the end of the day, perhaps recruiting talent should remain a human decision.

The good news is that AI can help companies in many ways without harming potential job candidates, such as connecting new employees with internal organizational information and company benefits during onboarding, or helping employees do their jobs more effectively rather than replacing them.